Setting up Braintrust in your AWS Account

This guide walks through the process of setting up Braintrust in your AWS account.

Before following these instructions, make sure you have account access and are able to visit the app.

There are two methods you can follow:

- Use the braintrust command-line (CLI) tool. This is the recommended method and requires just a single command.

- Use the AWS console. If you need more control or cannot get AWS credentials for the CLI, this option allows you to run the whole process within the AWS console.

Setting up the stack: CLI

Install the CLI

If you have not already, install the latest CLI:

pip install --upgrade 'braintrust[cli]'

# verify the installation worked
braintrust --help

Create the CloudFormation stack

There are just a few relevant parameters you should consider for most use cases:

- A stack name. This is arbitrary and allows you to refer back to the stack later. A name like "braintrust" or "braintrust-dev" should be fine.

- --org-name should be the name of your organization (you can find this in your URL on the app, e.g. https://www.braintrustdata.com/app/<YOUR_ORG_NAME>/...). This will ensure that only users with access to your organization can invoke commands on your Braintrust endpoint.

- --provisioned-concurrency is the number of lambda workers to keep running in memory. This is useful if you expect to have a lot of concurrent users, or if you want to reduce the cold-start latency of your API calls. Each increment costs about $40/month in AWS costs. The default is 0.

braintrust install api --create <YOUR_STACK_NAME> \
  --org-name <YOUR_ORG_NAME> \
  --provisioned-concurrency 1

This command is idempotent. If it fails, simply re-run the command without the --create flag, and it will resume tracking the progress of your CloudFormation stack. If your stack enters a failed state, e.g. it fails to create, please reach out to support.
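If you script the install, a small helper can keep the first attempt and the retry consistent. The sketch below only assembles the command shown above from its documented flags and applies the rough $40/month figure; the function names and the example stack and organization names are hypothetical, and it does not run the CLI itself.

```python
import shlex

# Rough figure quoted in this guide: each unit of provisioned concurrency
# costs about $40/month in AWS charges.
APPROX_MONTHLY_COST_PER_UNIT_USD = 40


def build_install_command(stack_name, org_name, provisioned_concurrency=0, create=True):
    """Return the argv list for `braintrust install api` (hypothetical helper)."""
    if provisioned_concurrency < 0:
        raise ValueError("provisioned_concurrency must be >= 0")
    argv = ["braintrust", "install", "api"]
    if create:
        # Only the first attempt passes --create; a retry omits it.
        argv.append("--create")
    argv.append(stack_name)
    argv += ["--org-name", org_name]
    argv += ["--provisioned-concurrency", str(provisioned_concurrency)]
    return argv


def estimate_monthly_cost_usd(provisioned_concurrency):
    """Approximate monthly cost of the chosen provisioned concurrency."""
    return provisioned_concurrency * APPROX_MONTHLY_COST_PER_UNIT_USD


if __name__ == "__main__":
    first_attempt = build_install_command("braintrust-dev", "my_org.com", 1)
    retry = build_install_command("braintrust-dev", "my_org.com", 1, create=False)
    print(shlex.join(first_attempt))
    print(shlex.join(retry))
    print(estimate_monthly_cost_usd(1))
```

You could pass the resulting list to subprocess.run if you want to launch the CLI from a script.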

Once the install completes, you'll see a log statement like

Stack with name braintrust has been updated with status: UPDATE_COMPLETE_CLEANUP_IN_PROGRESS

Endpoint URL: https://0o71finhla.execute-api.us-east-1.amazonaws.com/api/

Save the endpoint URL. You can now skip ahead to the Verifying the stack section.
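If you capture the CLI output in a script, the endpoint can be pulled out of it with a regular expression. This is a minimal sketch that assumes the output keeps the "Endpoint URL: ..." line format shown in the sample log; the function name is hypothetical.

```python
import re

# Matches the "Endpoint URL: ..." line shown in the sample log above.
ENDPOINT_LINE = re.compile(r"^Endpoint URL:\s*(\S+)\s*$", re.MULTILINE)


def extract_endpoint_url(cli_output):
    """Return the endpoint URL found in the CLI output, or None if absent."""
    match = ENDPOINT_LINE.search(cli_output)
    return match.group(1) if match else None


sample_output = (
    "Stack with name braintrust has been updated with status: "
    "UPDATE_COMPLETE_CLEANUP_IN_PROGRESS\n"
    "Endpoint URL: https://0o71finhla.execute-api.us-east-1.amazonaws.com/api/\n"
)
print(extract_endpoint_url(sample_output))
```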

Setting up the stack: CloudFormation console

Create the CloudFormation stack

The latest CloudFormation template for Braintrust is always available at this URL:

https://braintrust-cf.s3.amazonaws.com/braintrust-latest.yaml

To start, click this link, which will open up the CloudFormation setup window. If you prefer, you can also “create stack” directly and specify https://braintrust-cf.s3.amazonaws.com/braintrust-latest.yaml as the S3 template link.

These instructions walk through how to set up the stack through the AWS UI, but you are welcome to install it via the command line instead if you prefer.

You need to specify a few key parameters to help the stack connect to Kafka and your data warehouse. The most important one is OrgName, which allows users to authenticate. If you need any help configuring these parameters, check with your support contact at Braintrust. These parameters can be edited later, so it's okay to leave them blank for now.

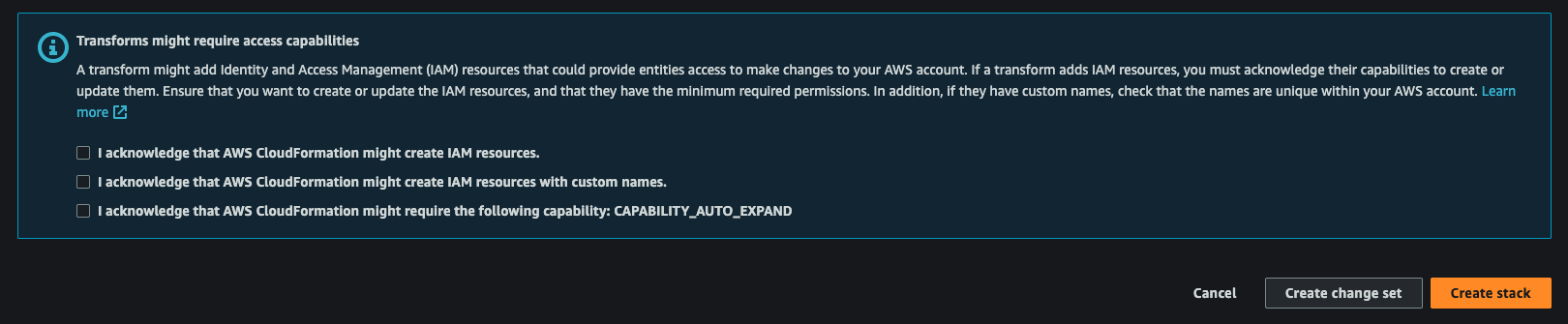

Once you fill in the parameters, accept the acknowledgments and click “Create stack” to start creating the stack. This can take a few minutes (up to ~10) to provision the first time.

Behind the scenes, the template sets up a few key resources:

- A VPC with public & private subnets for networking

- A lambda function which contains the logic for executing Braintrust commands

- An API gateway that runs commands against the lambda function

Getting the Endpoint URL

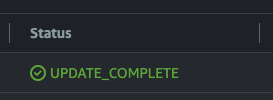

Once the stack is provisioned, you should see CREATE_COMPLETE as its status (or UPDATE_COMPLETE after a later update):

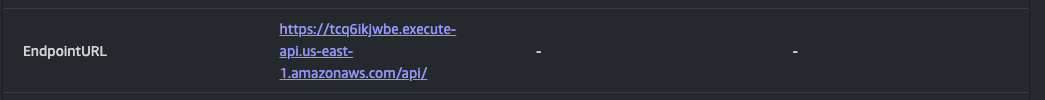

Click into the stack, navigate to the “Outputs” tab, and copy the value for EndpointURL. We’ll use this to test your endpoint and configure Braintrust to access your org through it. For the rest of the doc, we’ll refer to it as <YOUR_ENDPOINT_URL>.
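If you would rather read the output programmatically than copy it from the console, CloudFormation exposes the same values through its describe_stacks API. The sketch below assumes boto3 is installed and AWS credentials are configured; the helper names are hypothetical, and only the EndpointURL output key comes from this guide.

```python
def find_stack_output(describe_stacks_response, output_key="EndpointURL"):
    """Look up one output value in a CloudFormation describe_stacks response."""
    for stack in describe_stacks_response.get("Stacks", []):
        for output in stack.get("Outputs", []):
            if output.get("OutputKey") == output_key:
                return output.get("OutputValue")
    return None


def fetch_endpoint_url(stack_name):
    """Query CloudFormation for the stack's EndpointURL output (needs AWS credentials)."""
    import boto3  # third-party; imported lazily so the helper above has no dependencies

    client = boto3.client("cloudformation")
    return find_stack_output(client.describe_stacks(StackName=stack_name))
```

Calling fetch_endpoint_url with your stack name should return the same value shown in the Outputs tab, or None if the stack has no such output yet.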

Verifying the stack

Run the following command to test that the stack is running. The first time you run it, AWS may do some setup work to provision your lambda function, and it can take up to 30 seconds to run.

curl -X GET '<YOUR_ENDPOINT_URL>'

You should see an error like {"message":"Unauthorized"}. That's expected!
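The same check can be done from Python if you want to include it in a setup script. This is a sketch using only the standard library; the function names are hypothetical, and the 60-second timeout is an assumption chosen to leave room for the first-call delay mentioned above.

```python
import json
import urllib.error
import urllib.request


def is_expected_unauthorized(body_text):
    """True if the body is the JSON error the guide says to expect."""
    try:
        payload = json.loads(body_text)
    except ValueError:
        return False
    return isinstance(payload, dict) and payload.get("message") == "Unauthorized"


def check_endpoint(endpoint_url, timeout_seconds=60):
    """GET the endpoint without credentials and report whether it answered as expected."""
    try:
        with urllib.request.urlopen(endpoint_url, timeout=timeout_seconds) as response:
            body = response.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as error:
        # urllib raises on 4xx/5xx responses; the error object still carries the body.
        body = error.read().decode("utf-8", errors="replace")
    return is_expected_unauthorized(body)
```

A True result from check_endpoint means the stack is reachable and rejecting unauthenticated requests, which is the healthy state at this step.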

Configure your organization's endpoint

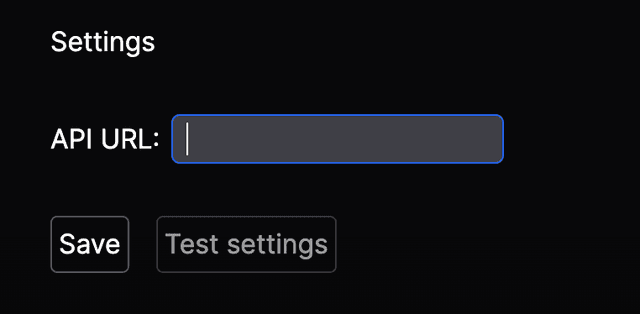

Visit your organization's settings page at http://www.braintrustdata.com/app/settings. You should see an entry for the API URL. Paste <YOUR_ENDPOINT_URL> from above here and click “Save”. If you refresh the page, you should see the new value set by default.

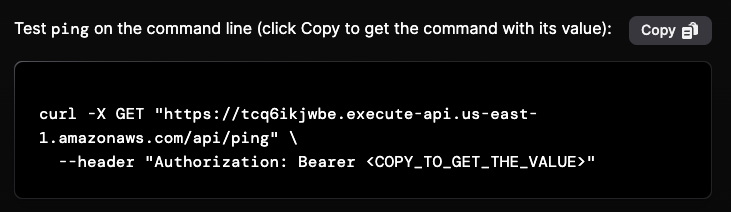

Testing the endpoint

Click the “Test settings” button, which will take you to a new page that runs an API command from your browser to ensure that everything is set up correctly. It also includes a command that you can copy and run in your terminal. If everything worked correctly, the page should look like:

If you experience any errors, please reach out to support@braintrustdata.com to diagnose further.

Test the application end-to-end

Hooray! At this point you should be able to test the full application. The easiest way to do this is by using the Python SDK.

This simple Python script will run a full loop of using Braintrust and setting up an experiment.

import braintrust

# NOTE:
# * You can specify your API key in the `api_key` parameter, or set it as an environment variable
#   `BRAINTRUST_API_KEY`. If you don't specify it, the SDK will look for the environment variable.
# * You should not specify api_url in the SDK. It will automatically use the value you set in the
#   settings page.
experiment = braintrust.init(project="SetupTest", org_name="my_org.com", api_key="sk-****")
experiment.log(
    inputs={"test": 1},
    output="foo",
    expected="bar",
    scores={
        "n": 0.5,
    },
    metadata={
        "id": 1,
    },
)
print(experiment.summarize())

Maintaining your installation

Most new Braintrust releases do not require stack updates. Occasionally, however, you will need to update the stack to get access to new features and performance enhancements. Like installation, you can update the stack through either the CLI or AWS console.

Using the Braintrust CLI

To update your stack, simply run (replacing <YOUR_STACK_NAME>):

braintrust install api <YOUR_STACK_NAME> --update-template

You can also use this command to change parameters, with or without template updates. For example, if you want to allocate provisioned concurrency 8 to your lambda functions, run

braintrust install api <YOUR_STACK_NAME> --provisioned-concurrency 8

Using the AWS interface

You can also update the stack directly through AWS. The AWS docs walk through how to update a stack through the console and the AWS CLI.

The rest of the guide covers topics only needed for advanced configurations.

Advanced configuration

The rest of this guide covers advanced topics, only needed if you plan to run Braintrust against an existing queue or warehouse system. In most cases, you do not need to configure any of these options.

Configure network access (VPC)

To permit incoming and outgoing traffic between Braintrust’s lambda functions and your cloud resources (Kafka, Redshift/Snowflake), you can either run everything in the same VPC or set up VPC peering.

If you specified ManagedKafka=true while creating the CloudFormation stack, then the new Managed Kafka instance will automatically be in the same VPC and security group as the lambda function.

VPC Peering

When you create your Braintrust CloudFormation stack, it automatically creates a VPC with the same name as the stack. You can create a Redshift instance in this VPC, and skip this section. However, if you'd like to connect Braintrust to an existing data warehouse that's in another VPC, you need to set up VPC peering.

You can access the Braintrust VPC’s name and ID from the CloudFormation’s Outputs tab (named pubPrivateVPCID).

AWS has a comprehensive guide for configuring peering. Follow the instructions for:

- Create a VPC peering connection

- Accept a VPC peering connection

- View your VPC peering connections

- Update your route tables for a VPC peering connection. Make sure to update the route tables in both VPCs.

- Update your security groups to reference peer security groups. We recommend allowing “All Traffic” from Braintrust’s VPC.
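The route-table step is the one most often done for only one side. As a rough illustration, the sketch below computes the route each VPC needs for a peering connection and rejects overlapping CIDR blocks, which AWS does not allow for peered VPCs. The function name, dictionary keys, and all IDs and CIDR ranges are hypothetical, and the code only plans the routes; it does not call AWS.

```python
import ipaddress


def plan_peering_routes(braintrust_vpc, warehouse_vpc, peering_connection_id):
    """Return the route each VPC's route table needs for a peering connection.

    Each VPC is a dict with hypothetical keys "route_table_id" and "cidr".
    """
    braintrust_net = ipaddress.ip_network(braintrust_vpc["cidr"])
    warehouse_net = ipaddress.ip_network(warehouse_vpc["cidr"])
    if braintrust_net.overlaps(warehouse_net):
        # AWS does not allow peering between VPCs whose CIDR blocks overlap.
        raise ValueError(f"CIDR blocks overlap: {braintrust_net} and {warehouse_net}")
    return [
        {
            # Traffic from the Braintrust VPC to the warehouse VPC.
            "route_table_id": braintrust_vpc["route_table_id"],
            "destination_cidr": str(warehouse_net),
            "target": peering_connection_id,
        },
        {
            # Return traffic from the warehouse VPC to the Braintrust VPC.
            "route_table_id": warehouse_vpc["route_table_id"],
            "destination_cidr": str(braintrust_net),
            "target": peering_connection_id,
        },
    ]


example_routes = plan_peering_routes(
    {"route_table_id": "rtb-braintrust", "cidr": "10.0.0.0/16"},
    {"route_table_id": "rtb-warehouse", "cidr": "172.31.0.0/16"},
    "pcx-example",
)
for route in example_routes:
    print(route)
```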

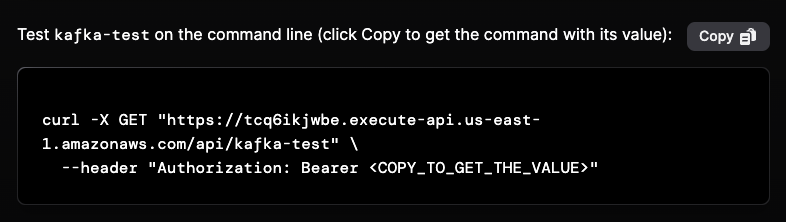

Test Kafka

Once you set up VPC peering, you should be able to test that the Kafka connection is working by running the kafka-test command via cURL on the settings page.

Troubleshooting

- If you continue to see errors after updating the VPC peering group, you may need to update your CloudFormation template (which will effectively reboot your Lambda functions). You can do this by triggering an update on the CloudFormation and letting it run. You may need to change a stack parameter and then change it back to trigger the updates.

- You can manually test network settings by booting up an EC2 machine in the Braintrust VPC and installing Kafka and database client drivers there to test connectivity. Make sure to assign a public IP to the instance and use the public subnet of the VPC while initializing.

Set up streamed ingest from Kafka to your data warehouse

First, create a topic in Kafka named braintrust (or something similar).

If you run the kafka-test command (instructions above), then the endpoint should automatically create the topic for you. You may see "in_progress": true in its response the first time you run it. This is expected, as Kafka can take some time to initialize a connection. You should just run the command again until in_progress is false.
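The "run it again until in_progress is false" advice can be wrapped in a small polling loop. In the sketch below, run_kafka_test is a hypothetical function that you supply: it should issue the kafka-test request (for example, the cURL command from the settings page) and return the parsed JSON response as a dict. The attempt count and delay are arbitrary assumptions.

```python
import time


def wait_for_kafka(run_kafka_test, max_attempts=10, delay_seconds=5.0, sleep=time.sleep):
    """Call run_kafka_test() until its response no longer reports in_progress.

    run_kafka_test is a caller-supplied function (hypothetical) that issues the
    kafka-test request and returns the parsed JSON response as a dict.
    """
    for attempt in range(1, max_attempts + 1):
        response = run_kafka_test()
        if not response.get("in_progress", False):
            return response
        if attempt < max_attempts:
            # Give Kafka time to finish initializing before trying again.
            sleep(delay_seconds)
    raise TimeoutError(f"kafka-test still in progress after {max_attempts} attempts")
```

The sleep parameter exists only so the loop can be exercised without real delays.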

The topic name is configurable and must also be specified as a parameter in your CloudFormation template. We refer to it below as <YOUR_TOPIC_NAME>. Once you’ve set up a topic, the instructions below will help you connect it to your cloud data warehouse.

If you are using Redshift, it’s very important that the topic name is lowercase. Redshift is case insensitive by default, and the background processes it runs to update your streaming materialized view will fail silently if the topic is not lowercase.
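Because the failure is silent, it can be worth checking the topic name before you create it. The sketch below is a hypothetical helper: the character and length limits are Kafka's general topic naming rules, and the lowercase check reflects the Redshift constraint described above.

```python
import re

# Kafka itself allows letters, digits, '.', '_' and '-' in topic names (up to 249 characters).
KAFKA_TOPIC_PATTERN = re.compile(r"[A-Za-z0-9._-]{1,249}")


def validate_topic_for_redshift(topic_name):
    """Raise ValueError unless the topic name is a legal Kafka name and all lowercase."""
    if not KAFKA_TOPIC_PATTERN.fullmatch(topic_name) or topic_name in (".", ".."):
        raise ValueError(f"not a legal Kafka topic name: {topic_name!r}")
    if topic_name != topic_name.lower():
        raise ValueError(f"topic name must be lowercase for Redshift: {topic_name!r}")
    return topic_name


print(validate_topic_for_redshift("braintrust"))
```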

Redshift

Redshift natively supports streaming from Kafka (specifically MSK). The AWS docs contain instructions on how to set it up.

Currently, Redshift only seems to support IAM authentication to Kafka. To set this up, you should create an IAM role that Redshift can assume, and attach the custom policy outlined in the doc (and copied below) to it. Make sure to replace 0123456789 with your AWS Account ID:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "MSKIAMpolicy",
      "Effect": "Allow",
      "Action": [
        "kafka-cluster:ReadData",
        "kafka-cluster:DescribeTopic",
        "kafka-cluster:Connect"
      ],
      "Resource": [
        "arn:aws:kafka:*:0123456789:cluster/*/*",
        "arn:aws:kafka:*:0123456789:topic/*/*/*"
      ]
    },
    {
      "Sid": "MSKPolicy",
      "Effect": "Allow",
      "Action": ["kafka:GetBootstrapBrokers"],
      "Resource": "*"
    }
  ]
}

Next, copy your MSK cluster’s ARN (<YOUR_MSK_ARN>) and the newly created IAM role’s ARN (<YOUR_IAM_ARN>) and run the following commands. You can use Redshift's built-in query editor to run these queries:

CREATE EXTERNAL SCHEMA braintrust_streaming
FROM MSK
IAM_ROLE '<YOUR_IAM_ARN>'
AUTHENTICATION iam
CLUSTER_ARN '<YOUR_MSK_ARN>';

CREATE MATERIALIZED VIEW braintrust_logs AUTO REFRESH YES AS
SELECT "kafka_partition",
       "kafka_offset",
       "kafka_timestamp_type",
       "kafka_timestamp",
       "kafka_key",
       JSON_PARSE("kafka_value") as data,
       "kafka_headers"
FROM braintrust_streaming."<YOUR_TOPIC_NAME>";

If you see errors like Failed to acquire metadata: Local: Broker transport, then the IAM policy has likely not been set up correctly.
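Since a mistyped account ID in the IAM policy is an easy way to end up with that error, you may prefer to generate the policy rather than edit it by hand. This sketch reproduces the policy from this guide with the account ID filled in; the function name is hypothetical, and the 12-digit check reflects the standard format of AWS account IDs.

```python
import json


def build_msk_iam_policy(aws_account_id):
    """Return the policy document from this guide with the account ID filled in."""
    if not (aws_account_id.isdigit() and len(aws_account_id) == 12):
        raise ValueError("AWS account IDs are 12-digit numbers")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "MSKIAMpolicy",
                "Effect": "Allow",
                "Action": [
                    "kafka-cluster:ReadData",
                    "kafka-cluster:DescribeTopic",
                    "kafka-cluster:Connect",
                ],
                "Resource": [
                    f"arn:aws:kafka:*:{aws_account_id}:cluster/*/*",
                    f"arn:aws:kafka:*:{aws_account_id}:topic/*/*/*",
                ],
            },
            {
                "Sid": "MSKPolicy",
                "Effect": "Allow",
                "Action": ["kafka:GetBootstrapBrokers"],
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    # "123456789012" is a placeholder; substitute your own account ID.
    print(json.dumps(build_msk_iam_policy("123456789012"), indent=2))
```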

Snowflake

Snowflake supports reading data from Kafka via Snowpipe.

Once you’ve set up your warehouse, you can test writing events into Kafka and they should appear in the streaming table.