Prompt playground

The prompt playground is currently only available to enterprise users.

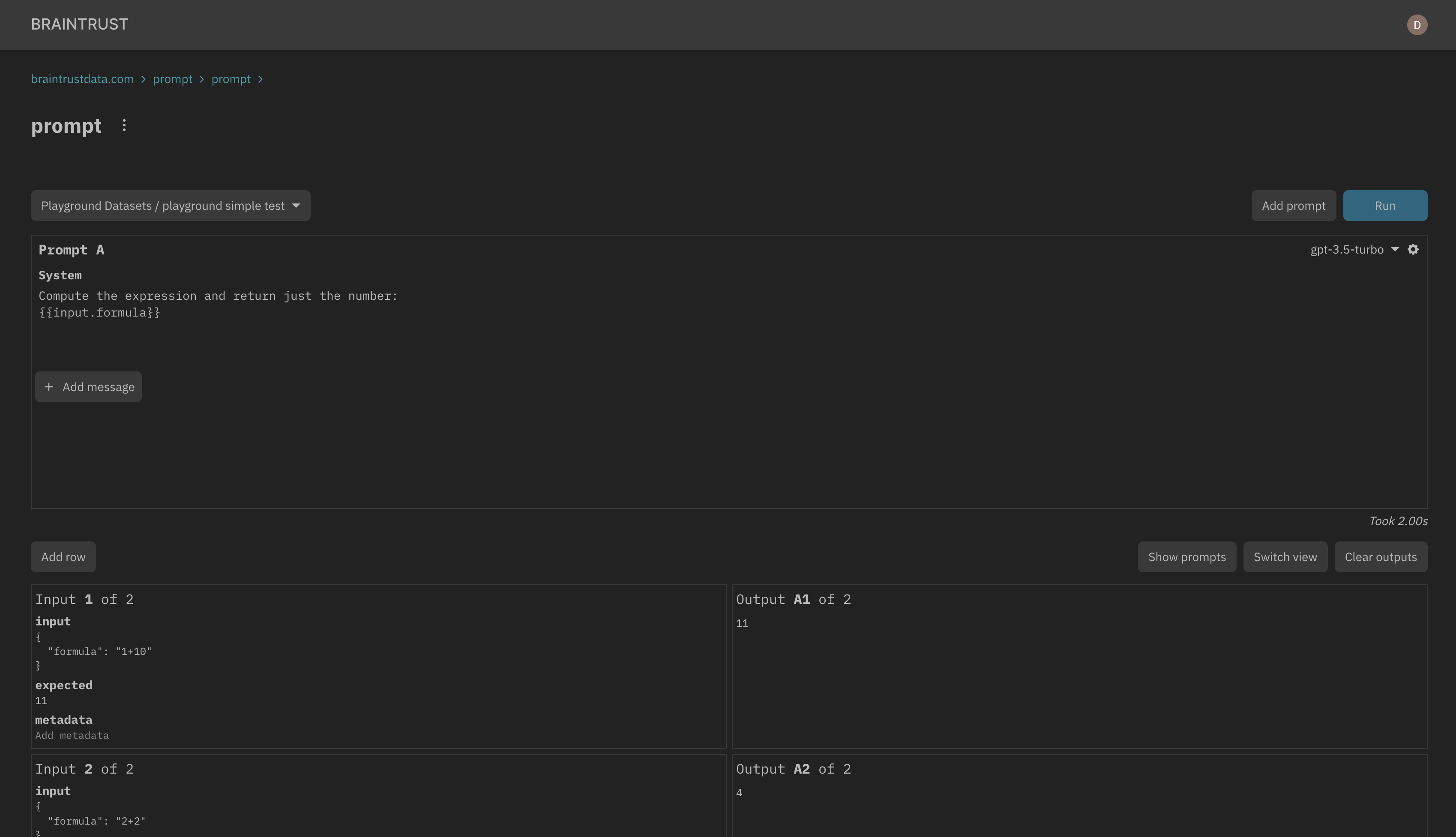

The prompt playground is a tool for exploring, comparing, and evaluating prompts. The playground is deeply integrated within Braintrust, so you can easily try out prompts with data from your datasets.

The playground currently supports OpenAI and Anthropic models. We're working on adding support for more models. Please reach out to us if you'd like to see support for a specific model.

Creating a prompt session

The prompt playground organizes your work into sessions. A session is a saved and collaborative workspace that includes one or more prompts and is linked to a dataset.

Sharing prompt sessions

Prompt sessions are designed for collaboration and automatically synchronize in real-time.

To share a prompt session, simply copy the URL and send it to your collaborators. Your collaborators must be members of your organization to see the session. You can invite users from the settings page.

Writing prompts

Each prompt includes a model (e.g. GPT-4 or Claude-2), a prompt string or messages (depending on the model), and an optional set of parameters (e.g. temperature) to control the model's behavior. When you click "Run" (or press Cmd/Ctrl+Enter), each prompt runs in parallel and the results stream into the grid below.
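As a rough mental model, a prompt can be thought of as a small record of model, messages, and parameters, and "Run" as a fan-out over all prompts. The Python sketch below illustrates that idea only; the `Prompt` dataclass, `call_model`, and `run_all` are hypothetical names, not Braintrust's API, and `call_model` is a stub that echoes its input instead of calling OpenAI or Anthropic. Unlike the playground, it collects results in order rather than streaming them.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class Prompt:
    # Hypothetical shape of one playground prompt: a model name,
    # a list of chat messages, and optional parameters such as temperature.
    model: str
    messages: list[dict[str, str]]
    params: dict[str, float] = field(default_factory=dict)


def call_model(prompt: Prompt) -> str:
    # Hypothetical stand-in for a real provider call; it echoes the
    # last message so the sketch runs offline.
    return f"[{prompt.model}] {prompt.messages[-1]['content']}"


def run_all(prompts: list[Prompt]) -> list[str]:
    # Run every prompt concurrently; map() returns results in input order.
    with ThreadPoolExecutor() as pool:
        return list(pool.map(call_model, prompts))


prompts = [
    Prompt("gpt-4", [{"role": "user", "content": "Say hi"}], {"temperature": 0.0}),
    Prompt("claude-2", [{"role": "user", "content": "Say hi"}]),
]
print(run_all(prompts))
```

Running prompts concurrently is what lets a comparison across several models finish in roughly the time of the slowest call rather than the sum of all of them.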

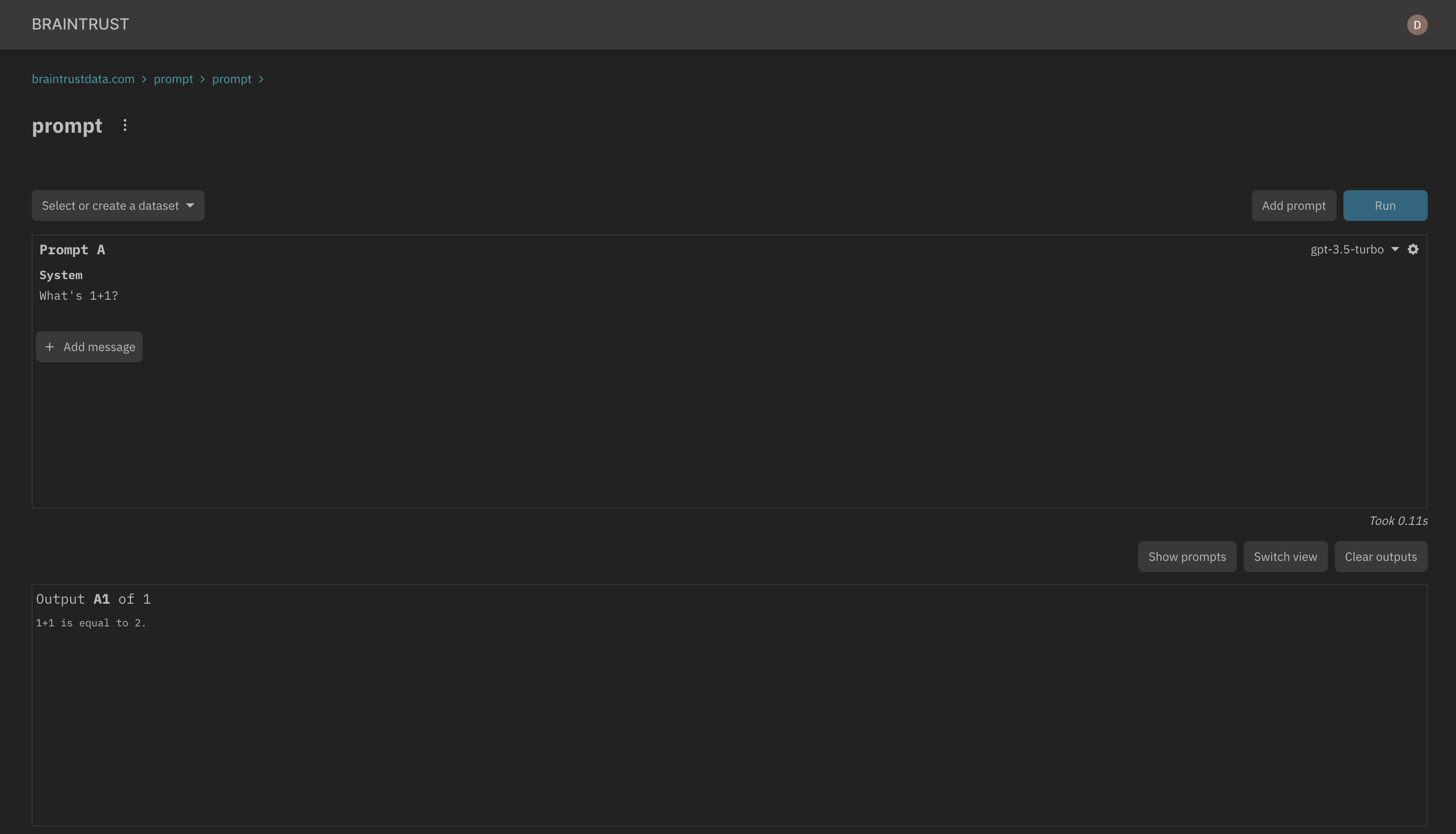

Without a dataset

By default, a prompt session is not linked to a dataset and is self-contained. This is similar to the behavior of other playgrounds (e.g. OpenAI's). This mode is a useful way to explore and compare self-contained prompts.

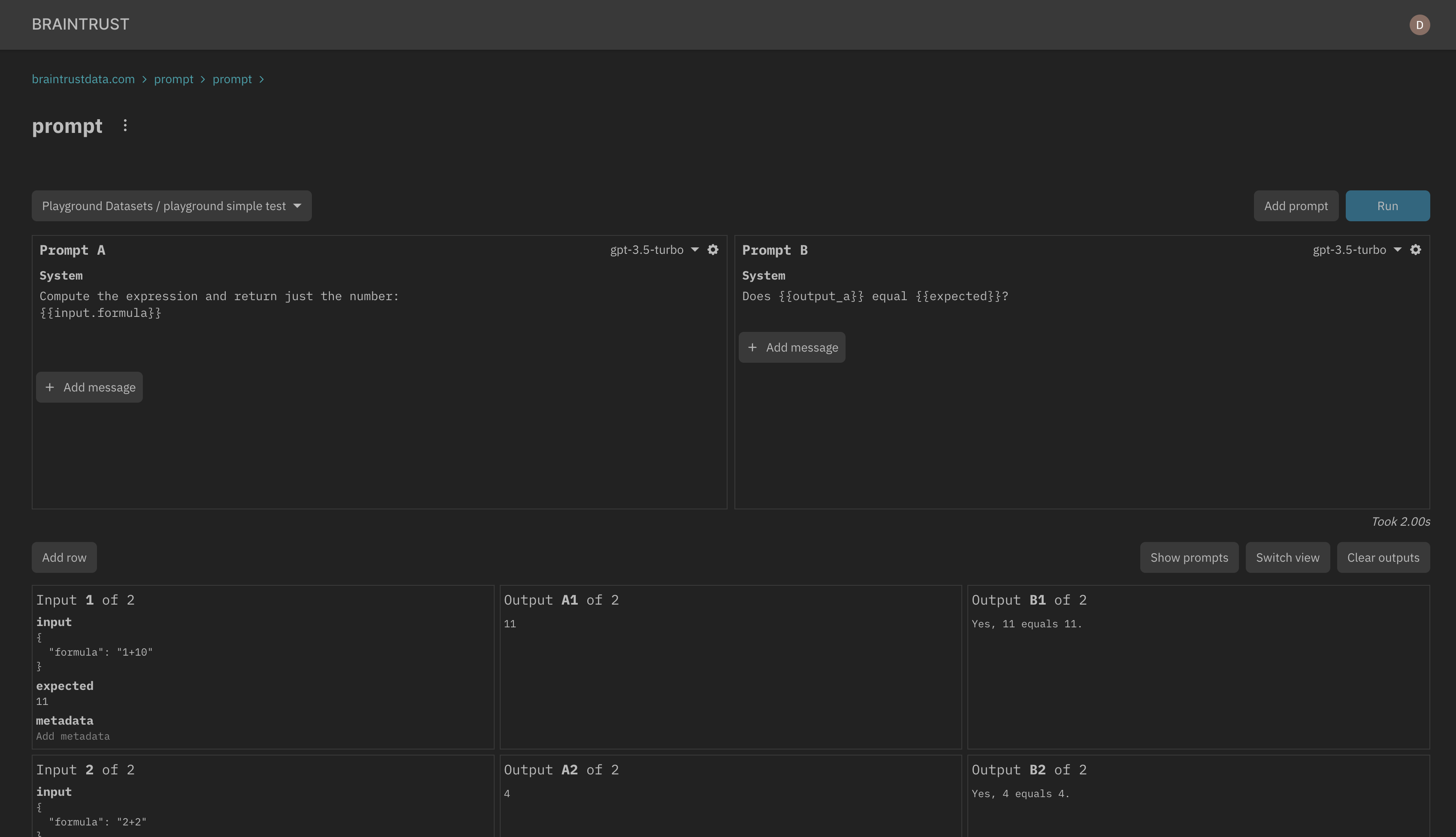

With a dataset

The real power of Braintrust comes from linking a prompt session to a dataset. You can link to an existing dataset or create a new one from the dataset dropdown.

Once you link a dataset, you will see a new row in the grid for each record in the dataset. You can reference the data from each record in your prompt using the input, output, and metadata variables. The playground uses mustache syntax for templating.
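To illustrate what the templating does, here is a simplified Python sketch of mustache-style substitution against one dataset record. It is not Braintrust's renderer: `render` is a hypothetical helper that handles only plain `{{name}}` tags, ignores mustache sections and partials, and does not HTML-escape values (standard mustache escapes `{{name}}` by default). Inserting non-string values as JSON text is an assumption made for the sketch, and the record's contents are made up.

```python
import json
import re

# Matches {{ name }} with optional surrounding whitespace.
TAG = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, variables: dict) -> str:
    def substitute(match: re.Match) -> str:
        # Missing variables render as an empty string, as in mustache.
        value = variables.get(match.group(1), "")
        # Assumption: non-string values are inserted as JSON text.
        return value if isinstance(value, str) else json.dumps(value)

    return TAG.sub(substitute, template)


# A hypothetical dataset record with input, output, and metadata fields.
record = {
    "input": "What is the capital of France?",
    "output": "Paris",
    "metadata": {"source": "geography-quiz"},
}
print(render("Answer the question: {{input}}", record))
```

For this record the rendered prompt is "Answer the question: What is the capital of France?"; each row in the grid corresponds to rendering the same template against a different record.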

Each value can be arbitrarily complex JSON, and mustache's dotted names let you reference fields nested inside it.
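The following Python sketch shows how dotted names such as `{{input.question}}` can resolve against nested JSON. The field names `question`, `choices`, and `difficulty` are hypothetical, `lookup` and `render` are illustrative helpers rather than Braintrust code, and serializing lists and objects as JSON text is an assumption, since mustache itself leaves that to the implementation.

```python
import json
import re

# Matches {{ name }} or {{ dotted.name }} with optional whitespace.
TAG = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def lookup(data: dict, dotted: str):
    # Walk a dotted name one key at a time; a missing key, or a step
    # into a non-object value, yields None.
    value = data
    for key in dotted.split("."):
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value


def render(template: str, variables: dict) -> str:
    def substitute(match: re.Match) -> str:
        value = lookup(variables, match.group(1))
        if value is None:
            return ""  # missing (or null) values render as empty
        # Assumption: non-string values are inserted as JSON text.
        return value if isinstance(value, str) else json.dumps(value)

    return TAG.sub(substitute, template)


# A hypothetical record whose input is a nested JSON object.
record = {
    "input": {"question": "What is 2 + 2?", "choices": ["3", "4", "5"]},
    "output": "4",
    "metadata": {"difficulty": "easy"},
}
print(render("Q: {{input.question}} Options: {{input.choices}}", record))
```

Keeping structured inputs as JSON objects, rather than pre-flattening them into strings, lets a single template pick out exactly the fields each prompt needs.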

Referencing outputs

Each prompt can reference the output of other prompts in the session (e.g. output_a). This is useful for validating outputs or chaining prompts together. For example, you can add a grading prompt that checks whether another prompt's output matches an expected value.
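A minimal Python sketch of that chaining, assuming the dataset's `output` field holds the expected answer and that prompt A's result is exposed to later prompts as `output_a`. The `render` helper, the stub `call_model`, and the prompt wording are hypothetical; the sketch simply runs prompt A before the grader and prints the grading prompt instead of sending it to a model.

```python
import re

# Matches {{ name }} with optional surrounding whitespace.
TAG = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, variables: dict[str, str]) -> str:
    # Simplified mustache-style substitution for flat string variables.
    return TAG.sub(lambda m: variables.get(m.group(1), ""), template)


def call_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model call so the sketch runs offline.
    return "Paris"


record = {"input": "What is the capital of France?", "output": "Paris"}

# Prompt A answers the question from the dataset record.
prompt_a = "Answer concisely: {{input}}"
output_a = call_model(render(prompt_a, record))

# The grading prompt sees the record plus prompt A's result as output_a.
grader = (
    "Expected answer: {{output}}\n"
    "Submitted answer: {{output_a}}\n"
    "Does the submitted answer match the expected answer? Reply Y or N."
)
grading_prompt = render(grader, {**record, "output_a": output_a})
print(grading_prompt)
```

Because the grader is just another prompt, its judgement appears in the grid alongside the other outputs, one row per dataset record.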